[kor] Accelerator-Aware Kubernetes Scheduler for DNN Tasks on Edge Computing Environment

Introduction

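- Edge computing services let a wide range of computing tasks run on the devices where the data is generated. Because raw data no longer has to be sent to a remote cloud server, this reduces latency and the network bandwidth needed for data transfer.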

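- Despite these advantages, edge devices are managed separately in a distributed environment, which makes it hard to manage the servers as a whole. As edge devices become more heterogeneous, it also becomes harder to schedule tasks that match each device's characteristics.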

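- Kubernetes is one of the open-source platforms for efficiently managing large-scale resources in distributed computing environments. Built on container technology, it can manage the large pool of server resources on edge devices and cloud servers together.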

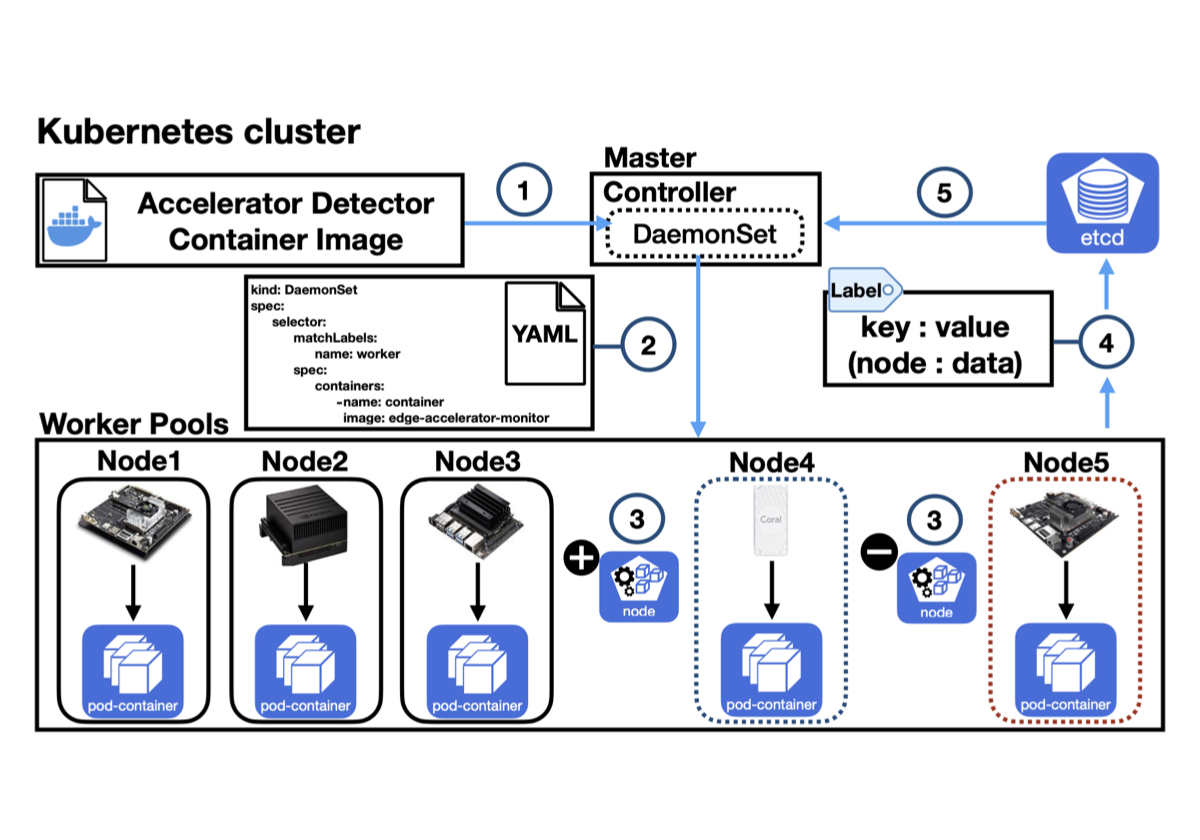

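- However, Kubernetes offers only limited support for resource information. It cannot take into account the complex relationships between workloads and hardware characteristics, which restricts how Kubernetes can schedule tasks. To address this, we implemented an automatic edge accelerator hardware detector for Kubernetes.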

[Automatic edge accelerator hardware detector for Kubernetes - reActor]

Implementation


**Docker install**

```bash
sudo apt-get install -y apt-transport-https ca-certificates curl gnupg-agent software-properties-common
sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
sudo add-apt-repository "deb [arch=arm64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
sudo apt-get update
sudo apt-get install -y containerd.io docker-ce docker-ce-cli
```

**Kubernetes install**

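```bash
sudo apt-get update && sudo apt-get install -y apt-transport-https curl
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
# sudo does not apply to a shell redirection, so use tee to write the file as root
echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list
sudo apt-get update
# kubectl is needed for the kubectl commands on the master node below
sudo apt-get install -y kubelet kubeadm kubectl
```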
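The cluster initialization step pins --kubernetes-version=v1.18.14, but installing kubelet and kubeadm without a version takes whatever the repository currently offers. kubeadm's version skew policy supports control planes only at kubeadm's own minor version or one minor version older, so it can be worth checking the installed kubeadm first. The following is a minimal sketch. It assumes version strings of the form vMAJOR.MINOR.PATCH with major version 1, and the helper names are illustrative:

```bash
# Sketch: check that the installed kubeadm may deploy the control-plane version
# given to `kubeadm init --kubernetes-version`. Illustrative helper names.
# Assumes version strings like v1.18.14 and a major version of 1.

# Print the MINOR part of a vMAJOR.MINOR.PATCH string.
minor_of() {
  local v="${1#v}"   # strip the leading "v"
  v="${v#*.}"        # strip "MAJOR."
  echo "${v%%.*}"    # keep everything before the next "."
}

# Succeed if kubeadm version $1 has the same minor version as target $2 or is
# one minor version newer (skew policy: same or one-older control plane).
kubeadm_can_deploy() {
  local tool target
  tool=$(minor_of "$1")
  target=$(minor_of "$2")
  [ "$target" -eq "$tool" ] || [ "$target" -eq $((tool - 1)) ]
}
```

For example, `kubeadm_can_deploy "$(kubeadm version -o short)" v1.18.14 || echo "install a 1.18.x kubeadm"`. If the check fails, one option is to pin the packages (for example kubeadm=1.18.14-00), assuming the repository still serves that version.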

**Disable swap and zram for Kubernetes**

```bash
sudo swapoff -a
# NVIDIA Jetson: remove the script that sets up zram swap at boot
sudo rm /etc/systemd/nvzramconfig.sh
```

**Kubernetes cluster setting (master node)**

- Initialize the cluster API on the master node; this issues a join token for the worker nodes.

```bash
sudo kubeadm init --apiserver-advertise-address=[master ip] --pod-network-cidr=10.244.0.0/16 --kubernetes-version=v1.18.14
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

- How to reissue a token

```bash
sudo kubeadm token create --print-join-command
```

**Kubernetes cluster setting (worker node)**

- On each worker node, join the cluster using the token issued on the master node.

```bash
sudo kubeadm join [master ip]:[port] --token [token data] --discovery-token-ca-cert-hash [token hash data]
```

- On Google Coral TPU device nodes, set the cgroup memory options before running the join command.

```bash
sudo vi /boot/firmware/nobtcmd.txt
# Append to the end of the existing kernel command line (on the same line):
#   cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1
sudo reboot
```

**Flannel network plugin install (master node)**

- Flannel configures the container network and assigns each pod an IP address.

```bash
sudo kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.13.0/Documentation/kube-flannel.yml
```
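The --pod-network-cidr=10.244.0.0/16 passed to kubeadm init matches the default network in Flannel's kube-flannel.yml, so the pod IPs Flannel assigns should fall inside that block. The following is a minimal sketch for checking this. The helper names are illustrative, and it assumes dotted-quad IPv4 addresses without leading zeros:

```bash
# Sketch: test whether an IPv4 address lies inside a CIDR block.
# Assumes dotted-quad input without leading zeros (10.244.1.7, not 010.244.1.7).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1          # split "a.b.c.d" into $1..$4
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if address $1 is inside CIDR $2 (e.g. 10.244.0.0/16).
ip_in_cidr() {
  local net="${2%/*}" bits="${2#*/}" mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For example, `ip_in_cidr 10.244.1.7 10.244.0.0/16 && echo inside` prints inside. Pods that use host networking, such as the kube-flannel and kube-proxy pods, report the node's IP instead, so only ordinary pods listed by kubectl get pod -o wide are expected to match.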

**Worker node role setting (master node)**

```bash
sudo kubectl label node [node name] node-role.kubernetes.io/worker=worker
```
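This label is what fills the ROLES column of kubectl get nodes. kubectl derives node roles from label keys of the form node-role.kubernetes.io/<role> and ignores the label value. The following is a minimal sketch of that mapping. The function name is illustrative, and it handles only that one label form:

```bash
# Sketch: derive node roles from a comma-separated label list, the format shown in
# the LABELS column of `kubectl get node --show-labels`. Only keys of the form
# node-role.kubernetes.io/<role> are handled; the label value is ignored.
roles_from_labels() {
  local label key roles="" old_ifs="$IFS"
  IFS=,
  for label in $1; do
    key="${label%%=*}"                     # text before the first "="
    case "$key" in
      node-role.kubernetes.io/?*)
        roles="${roles:+$roles,}${key#node-role.kubernetes.io/}" ;;
    esac
  done
  IFS="$old_ifs"
  echo "${roles:-<none>}"
}
```

With the label applied above, `roles_from_labels 'kubernetes.io/arch=arm64,node-role.kubernetes.io/worker=worker'` prints worker.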

**Kubernetes cluster setting check (master node)**

```bash
sudo kubectl get nodes
```
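When several edge devices join, it helps to check the whole node list automatically instead of reading it by eye. The following is a minimal sketch with an illustrative function name. It reads the output of kubectl get nodes --no-headers and fails unless every node's STATUS is exactly Ready:

```bash
# Sketch: fail unless every node's STATUS column is exactly "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin; a cordoned node
# ("Ready,SchedulingDisabled") or an empty node list also counts as a failure.
check_nodes_ready() {
  awk '
    $2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad = 1 }
    END { if (NR == 0) bad = 1; exit (bad ? 1 : 0) }
  '
}
```

Usage: `sudo kubectl get nodes --no-headers | check_nodes_ready && echo "all nodes Ready"`. A node that has joined but stays NotReady often means the network plugin (here Flannel) is not yet running on it.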

**Kubernetes ServiceAccount, DaemonSet**

These resources manage the hardware device nodes in the Kubernetes cluster: they extract each node's hardware information and label the node with it automatically.

- **ServiceAccount.yaml**


This file grants the deployed accelerator-information extraction container permission to access node information and to label its own node.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: accelerator-manager
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: accelerator-manager
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["list", "get", "patch", "update", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: accelerator-manager
subjects:
  - kind: ServiceAccount
    name: accelerator-manager
    namespace: default
roleRef:
  kind: ClusterRole
  name: accelerator-manager
  apiGroup: rbac.authorization.k8s.io
```
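After applying the file, you can confirm that the binding works by impersonating the ServiceAccount with kubectl auth can-i and checking each verb the ClusterRole grants on nodes. The following is a minimal sketch with an illustrative function name. It assumes a kubeconfig that is allowed to impersonate, such as the admin.conf copy made during kubeadm init:

```bash
# Sketch: check, by impersonating the ServiceAccount, that it holds every verb the
# ClusterRole above grants on nodes. Needs a kubeconfig allowed to impersonate
# (e.g. the admin.conf copy made during kubeadm init).
check_accelerator_manager_rbac() {
  local sa="system:serviceaccount:default:accelerator-manager" verb failed=0
  for verb in list get patch update watch; do
    # --quiet suppresses the yes/no output; the exit code carries the answer.
    if kubectl auth can-i "$verb" nodes --as="$sa" --quiet; then
      echo "$verb nodes: yes"
    else
      echo "$verb nodes: no"
      failed=1
    fi
  done
  return "$failed"
}
```

A "no" for patch in particular would mean the container cannot label its node.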


- **ARM64-Daemonset.yaml**


This file deploys the accelerator-information extraction container to NVIDIA Jetson TX1, TX2, Nano, Xavier and Google Coral TPU device nodes with the ARM64 architecture.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: edge-accelerator-monitor-daemonset
  namespace: default
  labels:
    name: edge-accelerator-monitor-daemonset
spec:
  selector:
    matchLabels:
      name: custom-container
  template:
    metadata:
      labels:
        name: custom-container
    spec:
      nodeSelector:
        kubernetes.io/arch: arm64
      serviceAccountName: accelerator-manager
      volumes:
        - name: path1
          hostPath:
            path: /etc/hostname
        - name: path2
          hostPath:
            path: /etc/nv_tegra_release
        - name: path3
          hostPath:
            path: /sys/kernel/debug/usb/devices
      containers:
        - name: edge-accelerator-monitor
          image: kmubigdata/edge-accelerator-monitor
          securityContext:
            privileged: true
          volumeMounts:
            - name: path1
              mountPath: /node_name
            - name: path2
              mountPath: /NVIDIA_driver_version
            - name: path3
              mountPath: /TPU_device_ID
          env:
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
      restartPolicy: Always
```
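The DaemonSet mounts the host's /etc/nv_tegra_release into the container as /NVIDIA_driver_version and passes the node name in NODE_NAME. A labeling step therefore only needs to read that file and call kubectl label. The following is a hypothetical illustration, not the project's hw-monitor-automatic-lableing script. It turns the L4T release line into a version string and applies it under an invented label key (accelerator.example.com/l4t-version). It also assumes the usual "# R32 (release), REVISION: 4.4, ..." first line of that file:

```bash
# Hypothetical sketch, not the project's hw-monitor-automatic-lableing script.
# Reads the host's /etc/nv_tegra_release (mounted at /NVIDIA_driver_version by the
# DaemonSet) and labels this node using the NODE_NAME environment variable.

# Hypothetical label prefix; the real detector may use different keys.
LABEL_PREFIX="accelerator.example.com"

# Turn "# R32 (release), REVISION: 4.4, ..." into "R32.4.4".
l4t_version() {
  sed -n '1s/^# R\([0-9]*\) (release), REVISION: \([0-9.]*\),.*/R\1.\2/p' "$1"
}

# Label the node with its L4T release. Fail if the path is not a regular file
# (on a node without /etc/nv_tegra_release the mount may be an empty directory).
label_jetson_node() {
  local release_file="${1:-/NVIDIA_driver_version}" version
  [ -f "$release_file" ] || return 1
  version=$(l4t_version "$release_file")
  [ -n "$version" ] || return 1
  kubectl label node "$NODE_NAME" "$LABEL_PREFIX/l4t-version=$version" --overwrite
}
```

The --overwrite flag lets the container update the label after a driver upgrade instead of failing because the key already exists.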


**How to apply the files**

```bash
kubectl apply -f ServiceAccount.yaml
kubectl apply -f ARM64-Daemonset.yaml
```

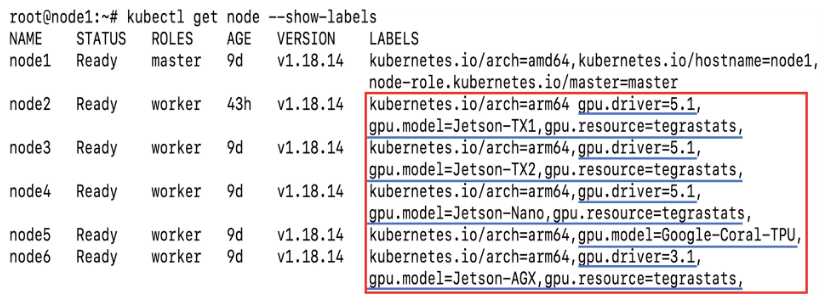

**How to check the DaemonSet, pods, containers, and labels**

```bash
kubectl get daemonset
kubectl get pod -o wide
kubectl get node --show-labels
```
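The LABELS column printed by kubectl get node --show-labels quickly becomes long. To see only the labels the detector added, you can filter each node's labels by key prefix. The following is a minimal sketch with an illustrative function name. The prefix is an argument because the detector's actual label keys are not listed here; substitute whatever prefix it uses:

```bash
# Sketch: print, for each node, only the labels whose key starts with a prefix.
# Reads `kubectl get node --show-labels` output on stdin; LABELS is the last column.
labels_with_prefix() {
  awk -v p="$1" 'NR > 1 {
    n = split($NF, kv, ",")
    out = ""
    for (i = 1; i <= n; i++)
      if (index(kv[i], p) == 1)                 # label key starts with the prefix
        out = out (out == "" ? "" : ",") kv[i]
    print $1 "\t" (out == "" ? "<none>" : out)
  }'
}
```

Usage: `kubectl get node --show-labels | labels_with_prefix kubernetes.io/` prints one line per node with its matching labels, or <none>.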

Result

- Appendix

- Covers NVIDIA Jetson and Google Coral TPU devices with the ARM64 architecture.

- The files below are a script and a Dockerfile that extract hardware information inside a container and automatically label the node with that information.

(NVIDIA Jetson: GPU model, GPU driver, GPU resource check method; Google Coral TPU: GPU model, vendor ID, product ID)
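For Coral devices, the vendor and product IDs come from the kernel's USB device list, which the DaemonSet mounts at /TPU_device_ID. The following is a minimal sketch of that extraction with illustrative function names. It assumes the "P:  Vendor=xxxx ProdID=xxxx" line format of /sys/kernel/debug/usb/devices. It also assumes the IDs commonly reported for the Coral USB Accelerator: 1a6e:089a before the Edge TPU runtime loads its firmware, and 18d1:9302 afterwards.

```bash
# Sketch: read USB vendor/product IDs from the kernel's USB device list
# (/sys/kernel/debug/usb/devices, mounted at /TPU_device_ID by the DaemonSet).
# Its "P:" lines look like: "P:  Vendor=18d1 ProdID=9302 Rev= 1.00".

# Print one "vendor:product" pair per USB device.
usb_ids() {
  sed -n 's/^P: *Vendor=\([0-9a-f]\{4\}\) ProdID=\([0-9a-f]\{4\}\).*/\1:\2/p' "$1"
}

# Succeed if a Coral USB Accelerator appears in the list. Assumed IDs:
# 1a6e:089a before the Edge TPU runtime loads its firmware, 18d1:9302 afterwards.
has_coral_usb() {
  usb_ids "$1" | grep -Eqx '1a6e:089a|18d1:9302'
}
```

Usage inside the container: `has_coral_usb /TPU_device_ID && echo "Coral USB Accelerator found"`.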


Dockerfile

https://github.com/ddps-lab/edge-accelerator-monitor/blob/main/MonitorContainer/ARM64/Dockerfile

```bash
docker pull kmubigdata/edge-accelerator-monitor
```

hw-monitor-automatic-lableing script


Demo video site

Source code & Docker image

- Github URL : https://github.com/ddps-lab/edge-accelerator-monitor

- Dockerhub URL : https://hub.docker.com/r/kmubigdata/edge-accelerator-monitor
